Welcome to the Biomedical Research Computing Facility (BRCF) Users wiki! Formerly known as the Research Computing Task Force (RCTF), the BRCF now has an official organizational home in the Center for Biomedical Research Support (CBRS).

Upcoming Maintenance

- The next regular BRCF POD maintenance will take place Tuesday September 21, 2021, 8am - 6pm

- The next EDU POD maintenance will take place Thursday September 23, 2021, 3pm - 6pm

Architecture Overview

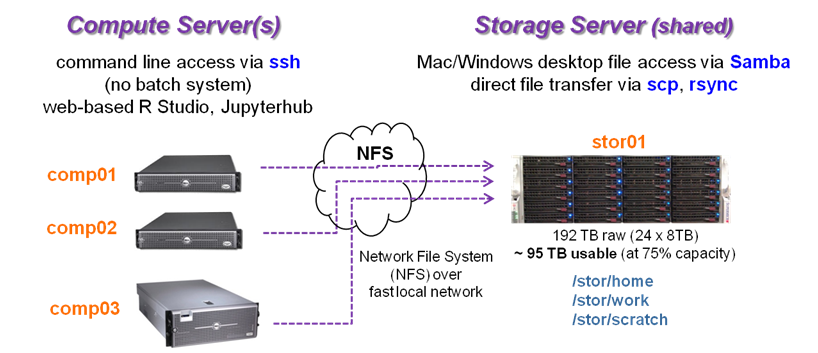

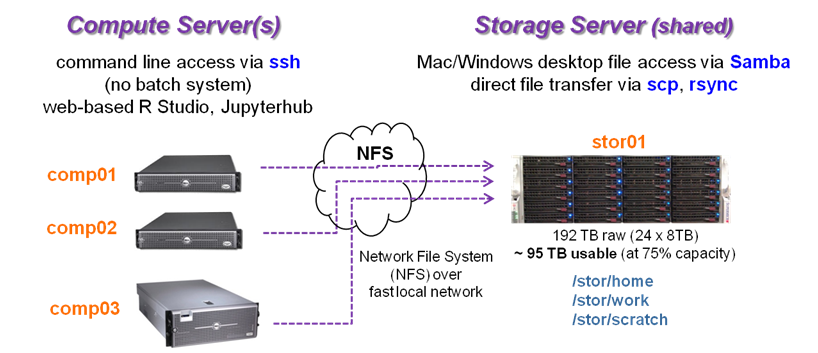

BRCF provides local, centralized storage and compute systems called PODs. A POD consists of one or more compute servers along with a shared storage server. Files on POD storage can be accessed from any server within that POD.

A graphic illustration of the BRCF POD compute/storage model is shown above.

Features of this architecture include:

- A large set of bioinformatics software available on all compute servers

    - interactive (non-batch) environment supports exploratory analyses and long-running jobs

    - web-based RStudio and JupyterHub servers implemented upon request

- Storage managed by the high-performance ZFS file system, with

    - built-in data integrity, far superior to standard Unix file systems

    - large, contiguous address space

    - automatic file compression

    - RAID-like redundancy capabilities

    - works well with inexpensive, commodity disks

- Appropriate organization and management of research data

    - weekly backup of Home and Work to spinning disk at the UT Data Center (UDC)

    - periodic archiving of backup data every 4-6 months to TACC's ranch tape archive system (~once/year)

- Centralized deployment, administration and monitoring

    - OS configuration and 3rd-party software installed via the Puppet deployment tool

    - global RCTF user and group IDs, deployable to any POD

    - self-service Account Management web interface

    - OS monitoring via the Nagios tool

    - hardware-level monitoring via Out-Of-Band Management (OOBM) interfaces
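The POD model described in this section (one or more compute servers sharing a single storage server) can be pictured with a small data-structure sketch. This is only an illustration: the `Pod` class, the `shares_storage` helper, and every POD and server name below are hypothetical and do not correspond to actual BRCF systems or tooling.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Pod:
    """A POD: one shared storage server plus one or more compute servers."""
    name: str
    storage_server: str
    compute_servers: list[str] = field(default_factory=list)


def pod_for_server(pods: list[Pod], server: str) -> Optional[Pod]:
    """Return the POD that contains the given compute server, if any."""
    for pod in pods:
        if server in pod.compute_servers:
            return pod
    return None


def shares_storage(pods: list[Pod], server_a: str, server_b: str) -> bool:
    """True when both compute servers belong to the same POD,
    and therefore see the same files on that POD's storage server."""
    pod_a = pod_for_server(pods, server_a)
    pod_b = pod_for_server(pods, server_b)
    return pod_a is not None and pod_a is pod_b


# Hypothetical PODs and server names, for illustration only.
pods = [
    Pod("example_pod", "storage01", ["comp01", "comp02"]),
    Pod("other_pod", "storage02", ["comp03"]),
]

same_pod = shares_storage(pods, "comp01", "comp02")
different_pods = shares_storage(pods, "comp01", "comp03")
```

In this sketch, `comp01` and `comp02` see the same files because they belong to the same POD, while `comp03` sits in a different POD with its own storage server.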

BRCF Architecture goals

The Biomedical Research Computing Facility (BRCF) is a working group of IT-knowledgeable UT staff and students from CSSB, GSAF and CCBB. With assistance from the College of Natural Sciences Office of Information Technology (CNS-OIT), BRCF has implemented a standard hardware, software and storage architecture, suitable for local research computing, that can be efficiently and centrally managed.

Broadly, our goals are to supplement TACC's offerings by providing extensive local storage, including backups and archiving, along with easy-access non-batch local compute.

Before the BRCF initiative, labs had their own legacy computational equipment and storage, as well as a hodgepodge of backup solutions (a common solution being "none"). This diversity, combined with dwindling systems administration resources, led to an untenable situation.

The Texas Advanced Computing Center (TACC) provides excellent computational resources for performing large-scale computations in parallel. However, its batch-system orientation is not well suited for running smaller, one-off computations, for developing scripts and pipelines, or for executing very long-running (> 2 days) computations. While TACC offers a no-cost tape archive facility (ranch), its persistent storage offerings (corral, global work file system) can be expensive and cumbersome to use for collaboration.

The BRCF POD architecture has been designed to address these issues and needs.

- Provide adequate local storage (spinning disk) in a large, non-partitioned address space.

- Implement some common file system structures to assist data organization and automation

- Provide flexible local compute capability with both common and lab-specific bioinformatics tools installed.

- Augment TACC offerings with non-batch computing environment

- Robust and "highly available" (but 24x7 uptime not required)

- Provide automated backups to spinning disk and periodic data archiving to TACC's ranch tape system.

- Target "sweet spot" of cost-versus-function commodity hardware offerings

- Aim for rolling hardware upgrades as technology evolves

- Provide centralized management of IT equipment

- Automate software deployment and system monitoring

- Make it easy to deploy new equipment

Recent Changes

Important changes to POD compute servers

The operating system on all POD compute servers has been upgraded from Ubuntu Linux 14.04 to Ubuntu 18.04. Please see Summer 2019 OS Upgrades for how this change may affect you.

Important changes to POD access from off campus

Per a directive from the UT Information Security Office (ISO), SSH access using passwords from outside the UT campus network has now been blocked. Please see the POD access discussion for more information.
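Because password-based SSH from off campus is blocked, a connection from outside the campus network has to authenticate some other way. One widely used option is SSH public-key authentication; whether that is the supported route for a given POD is an assumption here, and the POD access discussion remains the authority. The sketch below only builds the `ssh` command line and does not run it. The user name, host name, and key path are hypothetical placeholders.

```python
import os
import shlex


def build_ssh_command(user: str, host: str, key_path: str) -> list[str]:
    """Build an ssh argument list that uses public-key authentication only."""
    return [
        "ssh",
        "-i", os.path.expanduser(key_path),         # private key file to offer
        "-o", "PreferredAuthentications=publickey",  # try key-based auth only
        "-o", "PasswordAuthentication=no",           # never fall back to a password prompt
        f"{user}@{host}",
    ]


# Hypothetical values, for illustration only.
cmd = build_ssh_command("my_user", "pod-server.example.edu", "~/.ssh/id_ed25519")

# Print a shell-safe version of the command for inspection.
print(shlex.join(cmd))
```

Disabling the password fallback makes a failed key setup show up as an immediate authentication error rather than a password prompt.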